TL;DR

- Problem: End-to-end VLAs often lose reliable language–vision grounding in real clutter (distractors, absent targets, background shifts, unseen objects).

- Idea: OBEYED-VLA decouples perception from control using frozen VLM-based object-centric grounding plus masked-depth geometric grounding, then fine-tunes a VLA only on clean single-object demos.

- Results: In real-world UR10e tabletop experiments, OBEYED-VLA improves success across cluttered, absent-target, out-of-distribution (OOD) background, and unseen-object scenes; ablations confirm that both semantic grounding and depth cues are key contributors.

Abstract

Recent Vision–Language–Action (VLA) models make strong gains by post-training large Vision–Language Models for action prediction, but most treat perception and control as a single monolithic pipeline optimized purely for action. This end-to-end setup erodes language-conditioned visual grounding: in real-world trials, policies over-grasp when the target is absent, get distracted by clutter, and overfit to background appearance, degrading performance on realistic tabletops with distractors, background shifts, and unseen objects.

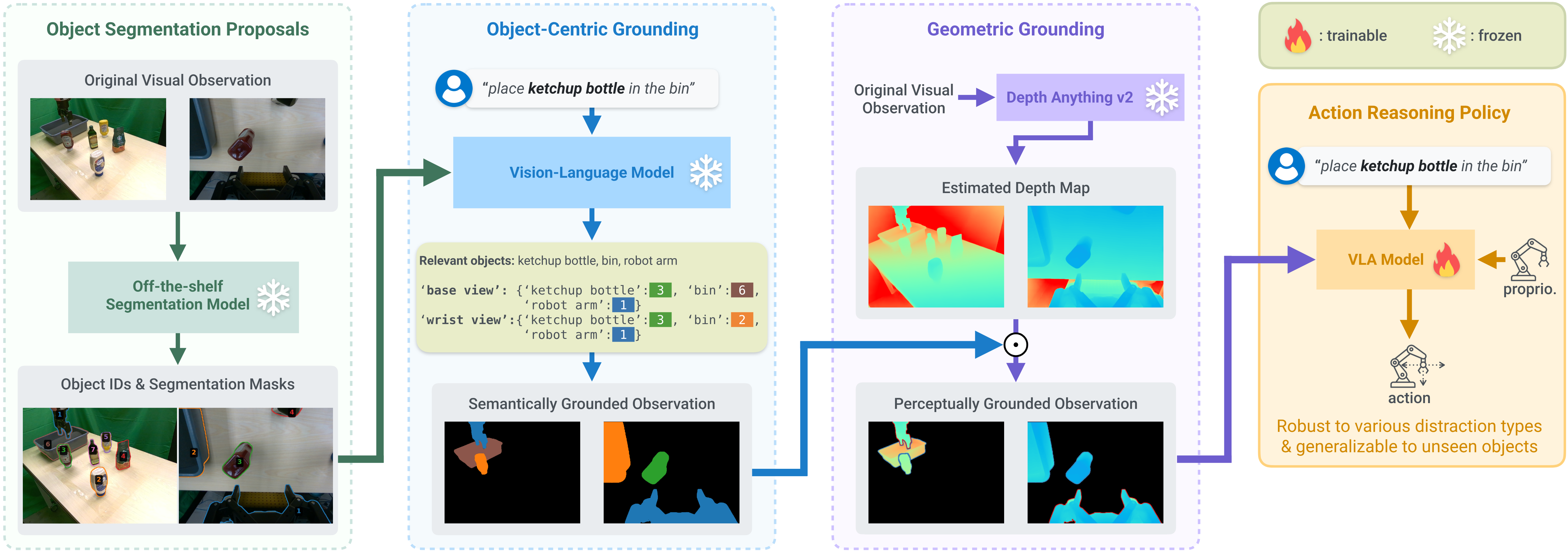

We propose OBEYED-VLA (OBject-centric and gEometrY groundED VLA), which explicitly disentangles perceptual grounding from action reasoning. Instead of operating on raw RGB, OBEYED-VLA augments VLAs with a perception module that grounds multi-view inputs into task-conditioned, object-centric, geometry-aware observations. A VLM-based object-centric grounding stage selects task-relevant regions across camera views, and a complementary geometric grounding stage emphasizes the 3D structure of these objects over appearance. The grounded views feed a pretrained VLA policy that we fine-tune only on single-object demonstrations captured without clutter or non-target objects.

On a real-world UR10e tabletop, OBEYED-VLA markedly improves robustness over strong VLA baselines across four challenging regimes and multiple difficulty levels: distractor objects, absent-target rejection, background appearance shifts, and cluttered manipulation of unseen objects. Ablations show both semantic and geometry-aware grounding are critical. Making perception an explicit, object-centric component strengthens and generalizes VLA-based robotic manipulation.

Approach

We take synchronized base- and wrist-view RGB observations and first run a real-time segmentation model to obtain object-level mask proposals in each view. Using set-of-mark prompting, a VLM parses the instruction, selects the task-relevant masks in the base view, and then matches those objects to the corresponding wrist-view regions via reference-crop–conditioned cross-view grounding. We use the selected masks to suppress all irrelevant pixels, producing object-centric views that retain only instruction-relevant objects (and the bin/robot as needed). Next, we estimate depth from each view and apply the same masks to form geometry-focused (masked-depth) observations that emphasize 3D structure while discarding appearance cues. These perceptually grounded views, together with the language instruction and proprioception, are fed into a pretrained VLA backbone that we fine-tune on clean single-object demonstrations while keeping the grounding modules frozen.
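The masking step described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `ground_view`, its arguments, and the choice to zero out suppressed pixels are hypothetical, and the segmentation proposals, the VLM's mask selection, and the depth estimate are all treated as precomputed inputs.

```python
import numpy as np

def ground_view(rgb, depth, masks, selected_ids, keep_mask=None):
    """Build object-centric RGB and masked-depth observations for one view.

    rgb:          (H, W, 3) uint8 image
    depth:        (H, W) float depth estimate for the same view
    masks:        (N, H, W) boolean mask proposals (assumed precomputed)
    selected_ids: indices of proposals judged task-relevant (assumed given)
    keep_mask:    optional (H, W) boolean mask of always-kept regions,
                  e.g. the bin or the robot
    """
    # Union of all selected object masks.
    keep = np.zeros(depth.shape, dtype=bool)
    for i in selected_ids:
        keep |= masks[i]
    if keep_mask is not None:
        keep |= keep_mask

    # Suppress every pixel outside the kept regions (assumption: fill with 0).
    object_rgb = np.where(keep[..., None], rgb, 0).astype(rgb.dtype)
    masked_depth = np.where(keep, depth, 0.0)
    return object_rgb, masked_depth


# Toy usage: two proposals on a 4x4 image, only proposal 0 is task-relevant.
rgb = np.full((4, 4, 3), 200, dtype=np.uint8)
depth = np.ones((4, 4), dtype=np.float32)
masks = np.zeros((2, 4, 4), dtype=bool)
masks[0, :2, :2] = True   # target object
masks[1, 2:, 2:] = True   # distractor
object_rgb, masked_depth = ground_view(rgb, depth, masks, selected_ids=[0])
```

Because the same `keep` mask is applied to both modalities, the distractor and the background vanish from the RGB view and the depth view alike, which is the property the grounded observations rely on.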

Experiments

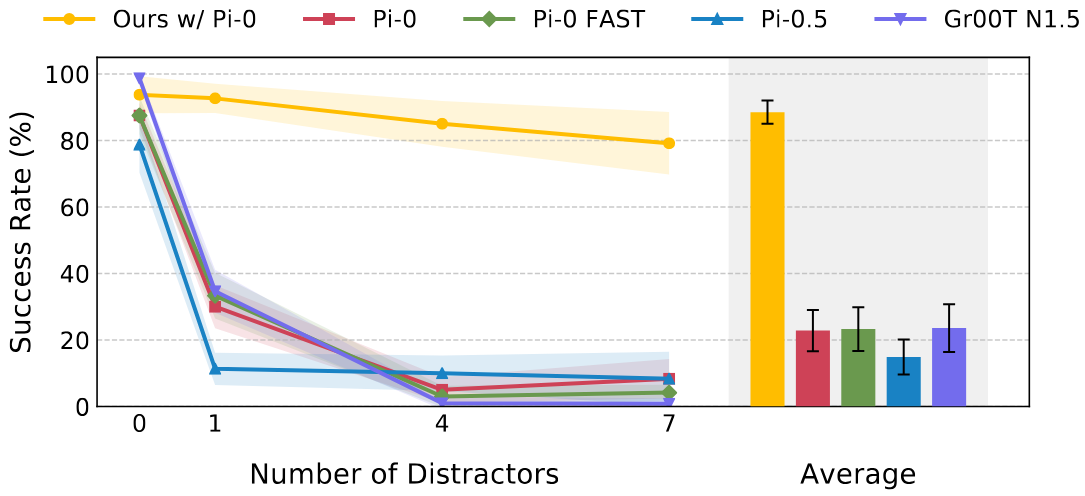

Cluttered Scenes with Distractor Objects

OBEYED-VLA consistently maintains attention on the instructed target even with 1 to 7 distractors, whereas end-to-end VLA baselines are often diverted to task-irrelevant objects and degrade sharply as clutter increases. With object- and geometry-grounded inputs, OBEYED-VLA sustains roughly 80 to 90 percent success under heavy clutter, indicating that explicit perceptual grounding is the key driver of reliable language following rather than additional action-centric tuning.

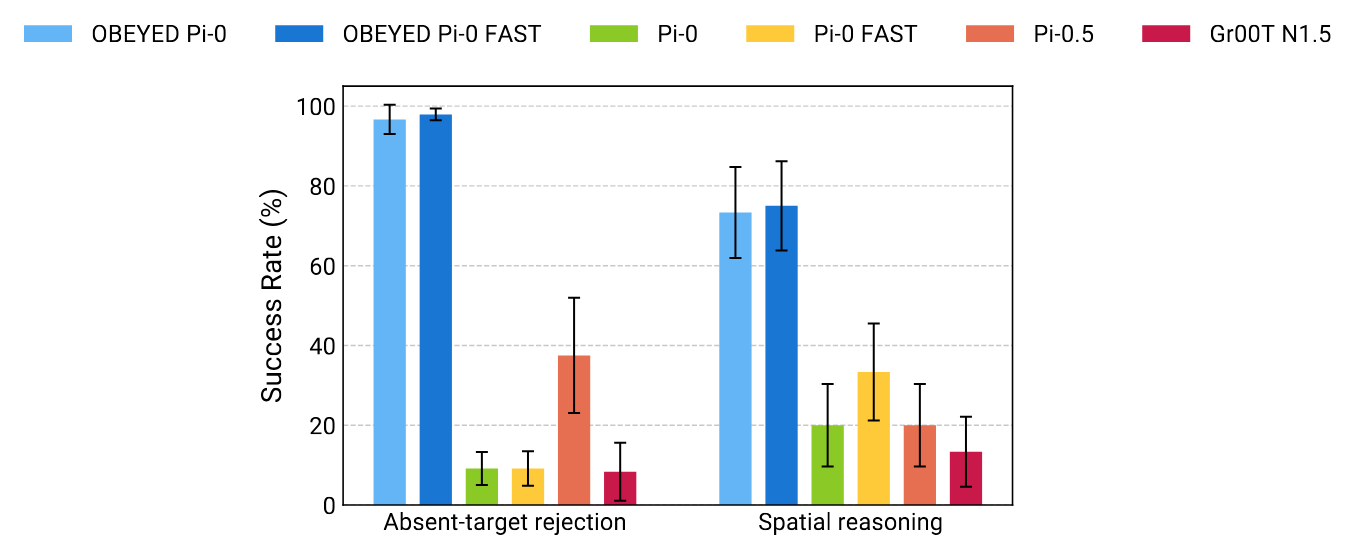

Spatial Reasoning

“place object on the left in the bin”

“place object in the middle in the bin”

“place object on the right in the bin”

With purely relational prompts (“left/middle/right”), OBEYED-VLA’s cross-view grounding disambiguates partially visible objects, driving large gains over the Pi-0 and Pi-0 FAST baselines. Base-view anchors keep wrist-view grounding from drifting to the most salient blob, so spatial picks stay reliably correct.

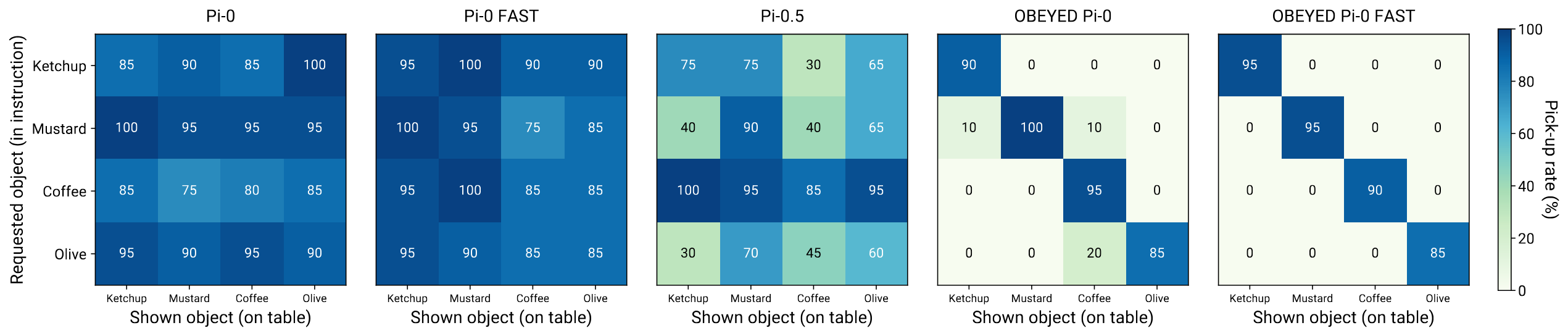

Absent-Target Rejection

“pick up the mustard bottle and place it in the bin”

When the instruction is infeasible (target missing), OBEYED-VLA almost always abstains. The heatmaps above for OBEYED Pi-0 and OBEYED Pi-0 FAST confirm this: pick-up rates are near zero on the off-diagonal cells, where the requested object differs from the object shown, while end-to-end baselines retain large off-diagonal mass and still grasp more than 75 percent of the time.
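To make the heatmap reading concrete, the sketch below shows one way a requested-versus-shown pick-up matrix and its off-diagonal rate could be computed from trial logs. The function names, the `(requested, shown, picked)` log format, and the toy trials are all hypothetical and are not data from the experiments.

```python
import numpy as np

def pickup_rate_matrix(trials, objects):
    """Rows: requested object, columns: object actually shown.

    trials:  iterable of (requested, shown, picked) tuples (assumed format)
    objects: ordered list of object names
    Returns an (n, n) array of pick-up rates, NaN for untested pairs.
    """
    idx = {name: i for i, name in enumerate(objects)}
    n = len(objects)
    picks = np.zeros((n, n))
    counts = np.zeros((n, n))
    for requested, shown, picked in trials:
        r, s = idx[requested], idx[shown]
        counts[r, s] += 1
        picks[r, s] += bool(picked)
    rates = np.full((n, n), np.nan)
    np.divide(picks, counts, out=rates, where=counts > 0)
    return rates

def off_diagonal_rate(rates):
    """Mean pick-up rate over absent-target cells (requested != shown)."""
    off = ~np.eye(rates.shape[0], dtype=bool)
    return float(np.nanmean(rates[off]))


# Made-up trials for illustration only.
objects = ["mustard", "cup"]
trials = [
    ("mustard", "mustard", True),   # feasible, picked
    ("mustard", "cup", False),      # infeasible, correctly abstained
    ("cup", "mustard", True),       # infeasible, wrongly grasped
    ("cup", "cup", True),           # feasible, picked
]
rates = pickup_rate_matrix(trials, objects)
absent_target_rate = off_diagonal_rate(rates)
```

A policy that rejects absent targets reliably drives `absent_target_rate` toward zero while keeping the diagonal entries high.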

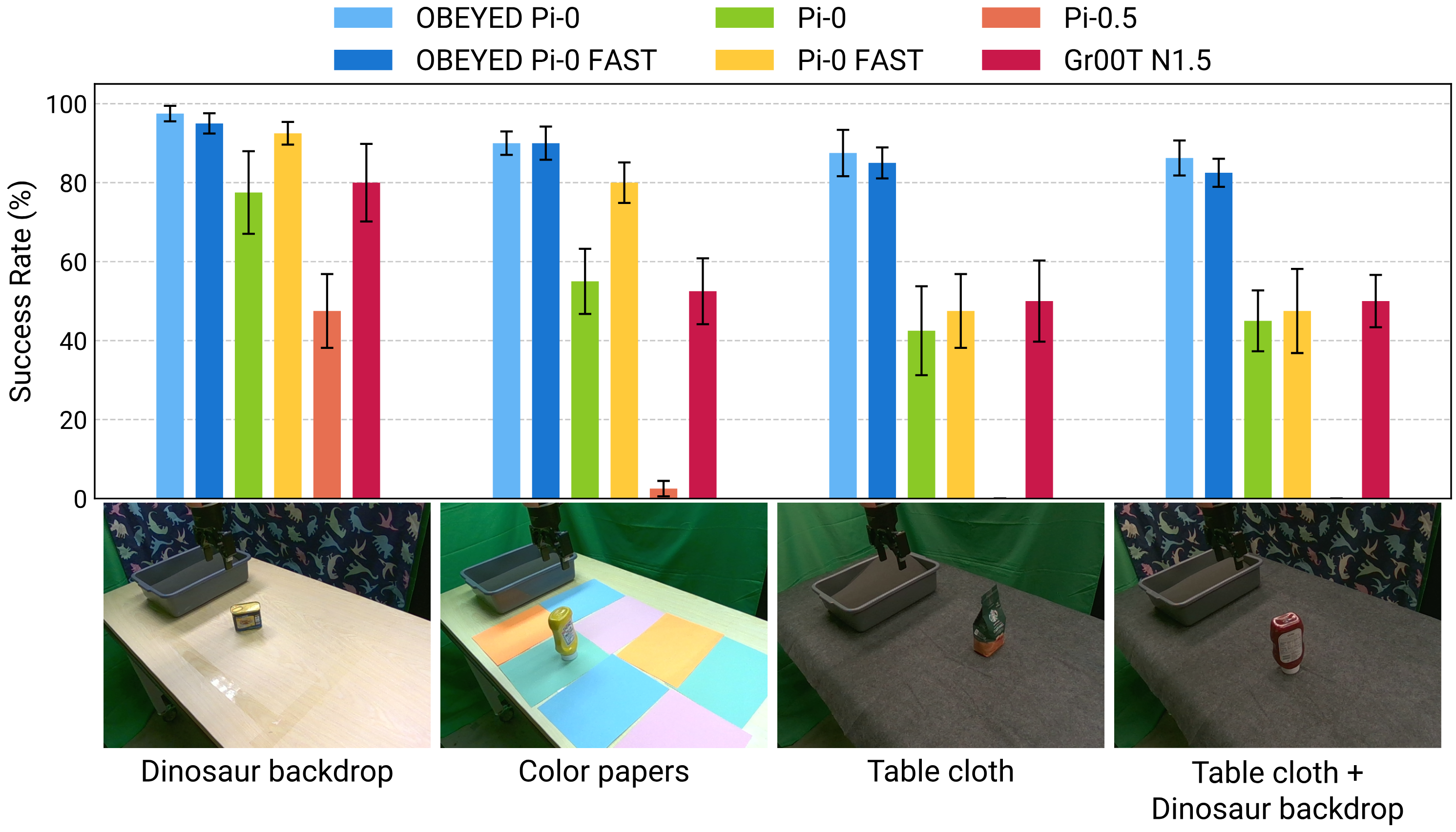

Background Appearance Shift

“place the instructed item despite background shift”

Swapping tablecloths, backdrops, or both barely dents OBEYED-VLA, while baselines lose 10 to 30 points. The grounded inputs suppress background textures, so the policy is not thrown off by new colors or patterns. Appearance shifts are handled by filtering out background variation rather than relying on the policy to generalize from action tuning alone.

Unseen Objects in Cluttered Scenes

Even when every item is novel, OBEYED-VLA keeps success high while baselines crater. Geometry-aware grounding decouples grasp decisions from texture and color priors learned on seen objects, so novel bottles and bags still map cleanly to instructions, enabling zero-shot object generalization without synthetic clutter data.

BibTeX citation

@misc{obeyedvla,
  author = "Khoa Vo and Taisei Hanyu and Yuki Ikebe and Trong Thang Pham and Nhat Chung and Minh Nhat Vu and Duy Nguyen Ho Minh and Anh Nguyen and Anthony Gunderman and Chase Rainwater and Ngan Le",
  title = "Clutter-Resistant Vision–Language–Action Models through Object-Centric and Geometry Grounding",
  year = "2025",
}